By Sally Pritchett

CEO

We're uniting communicators to navigate the ever-evolving AI landscape. Here, we share insights from our most recent 'Navigating AI Together' roundtable.

AI can be a scary topic. For many, the opportunity for a big productivity boost sits alongside fears of role replacement and the devaluing of skills. When we heard these concerns from fellow communicators, we knew we needed to help. Our ‘Navigating AI Together’ forum supports communicators to manage the transition to AI together, safely, ethically, and with positive and open mindsets.

In our most recent session of Navigating AI Together, we explored the current state of AI, strategies for making communication more accessible and inclusive, the importance of AI transparency, and the fascinating world of AI voice cloning.

In previous sessions we have:

- Explored the role of AI in communications, including its challenges and opportunities.

- Shared a guide to prompting, tailored to communication professionals.

- Discussed the creation of policies for responsible AI usage and user accountability.

- Provided a review of Midjourney.

- Examined AI-assisted video creation.

The current state of AI

In the latest ‘Navigating AI Together’ session we reflected on the rapid growth of AI, especially the recent emergence of generative AI, symbolised by ChatGPT’s meteoric rise. With the genie now out of the bottle, the AI landscape is evolving at great speed, producing an influx of new uses, applications, and platforms. However, experts suggest that we may not see another technological leap of this magnitude anytime soon. Our collective responsibility now is to maximise AI’s potential while following clear usage guidelines and responsible practices.

One statistic is particularly concerning: 73% of users trust content produced by generative AI. This underlines the importance of close human oversight of AI-generated content, especially in communications, where reliability and truthfulness are paramount.

Legislation and regulation may be in development, but progress is likely to be slow and may not fully address the intricacies of AI usage. We believe it’s time for the communications industry to take the lead in setting its own guidelines for AI usage.

Despite all the disruption, there is a positive note. AI is already making a substantial impact in many domains, from improving cancer screening to addressing climate change, conserving wildlife, and fighting world hunger. The potential for AI to do good is vast and exciting.

How AI can enhance communication accessibility

Our role as communication professionals includes making our communication as effective as possible and ensuring it is accessible to everyone. Currently, fewer than 4% of the world’s top million websites are accessible to people with disabilities. AI can help accelerate progress in this regard by simplifying and enhancing communication:

- Copy simplification with AI editors – Tools like Hemingway can simplify copy and improve readability, making communication more accessible.

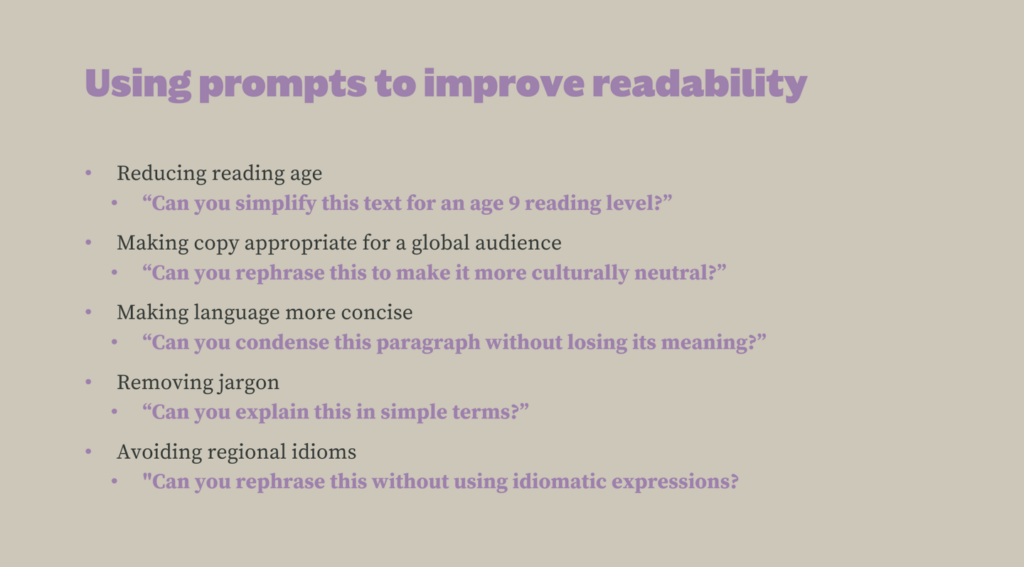

- AI prompts for readability – Using AI prompts to tailor text for different reading levels, cultures, and languages.

- Digital signing – New platforms in development, like Signapse, will be able to create sign language from text or audio, making communication accessible to people who use sign language.

- Translation – AI can improve and speed up translation, producing accurate, natural-sounding results and learning from feedback over time.

- Image descriptors – Tools like Verbit can create audio descriptions of images in real time.

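- Text to speech / speech to text – Converting between written and spoken words makes content accessible to people who find one format easier than the other, including people with visual or hearing impairments.

- Multilingual chatbots – Answer questions and provide information in a user’s own language.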
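Readability tools such as Hemingway report a reading grade level for a piece of copy. As a rough illustration of how that kind of score can be calculated, here is a minimal Python sketch of the well-known Flesch-Kincaid grade level formula. We are not claiming this is the formula Hemingway or any other tool uses, and the syllable counter is a simple vowel-group heuristic we have assumed for illustration, so the scores are only approximate.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables by counting groups of consecutive vowels (a rough heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A final silent "e" (as in "make") usually adds no syllable; "-le" endings (as in "table") do.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level: higher numbers mean harder-to-read copy."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    # Longer sentences and longer words both push the grade level up.
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59

plain = "We use AI to help us write. It saves time."
dense = ("Organisations increasingly leverage generative artificial intelligence "
         "to accelerate communication workflows and operational efficiency.")
print(f"Plain copy: grade {flesch_kincaid_grade(plain):.1f}")
print(f"Dense copy: grade {flesch_kincaid_grade(dense):.1f}")
```

Lower scores suggest copy that more people can read easily. Here the short, plain sentences score far lower than the single jargon-heavy one, which is the kind of gap an AI editor or a readability prompt can help close.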

The importance of AI transparency

During the forum we addressed the significant issue of transparency in AI. We posed thought-provoking questions to the group about transparency and shared considerations for when, how, and whether to be transparent in our AI usage. Our conversations showed that transparency in AI isn’t a straightforward, black-and-white matter; rather, it exists on a spectrum with shades of grey. Determining when to be transparent about our use of AI can pose significant challenges.

Regulation has already driven the need for transparency in various digital domains, such as cookies on websites and advertising on social media. Just as privacy regulations have mandated the disclosure of cookie usage, AI could follow a similar path. Users may come to expect clear information about when AI is involved in content generation, and it may be time for communicators to rise to this challenge without waiting for legislation.

AI voice cloning

Voice cloning tools use AI and deep learning algorithms to replicate and mimic human voices. These tools analyse the vocal characteristics, pitch, tone, and nuances of a person’s voice from a sample of audio recordings. Once trained, they can generate speech that closely resembles the original speaker’s voice, allowing for the creation of natural-sounding, synthetic voices.
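To make “analysing pitch” a little more concrete, the sketch below estimates the pitch (fundamental frequency) of a short audio sample using autocorrelation: it looks for the delay at which the signal best lines up with a shifted copy of itself. This is a simplified illustration of just one of the vocal characteristics mentioned above, not how any particular voice cloning product works; real tools rely on deep learning models trained on recordings. The function name, the 60–400 Hz search range, and the synthetic test tone are all assumptions for illustration.

```python
import math

def estimate_pitch_hz(samples, sample_rate, min_hz=60.0, max_hz=400.0):
    """Estimate pitch (fundamental frequency) with a simple autocorrelation search.

    Hypothetical helper for illustration: it tries every delay ("lag") that
    corresponds to a pitch between min_hz and max_hz and keeps the lag where
    the signal overlaps most strongly with a shifted copy of itself.
    """
    min_lag = int(sample_rate / max_hz)  # short lag = high pitch
    max_lag = int(sample_rate / min_hz)  # long lag = low pitch
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, min(max_lag, len(samples) - 1) + 1):
        # Sum of products between the signal and itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    # One repeat every `best_lag` samples means sample_rate / best_lag repeats per second.
    return sample_rate / best_lag

# Synthetic 0.1-second test tone at 200 Hz, sampled 16,000 times per second (not a real voice).
SAMPLE_RATE = 16_000
tone = [math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(1_600)]
print(f"Estimated pitch: {estimate_pitch_hz(tone, SAMPLE_RATE):.1f} Hz")
```

Applied to a real recording, the same idea would need extra steps, such as splitting the audio into short frames and skipping silent ones, and pitch is only one ingredient alongside tone and other nuances a cloning model learns.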

AI voice cloning has numerous applications, from creating realistic voice assistants to generating voiceovers for content. However, it also raises ethical concerns about potential misuse and impersonation, highlighting the need for responsible use and regulation in this rapidly advancing field.

We investigated several voice cloning tools and shared our findings with attendees. If you’d like to find out more, please get in touch.

Navigating AI together: overcoming bias and achieving inclusion

In our next session we will examine the biases embedded in AI, explore how to mitigate them, and look at how AI tools can foster greater inclusion and authentic representation.

Let’s continue Navigating AI Together, ensuring responsible, ethical, and inclusive AI usage in our communication practices.