Exploring fresh graphic design in creative communications

By Sally Pritchett

CEO

Explore the freshest trends in graphic design and find inspiration to create communications that connect with your audience.

As spring blooms all around us, there’s no better time to infuse your creative communications with the spirit of renewal and growth. In this article, we’re sharing what’s been inspiring us lately and examining some of the design trends we’re excited to see.

Sustainability and purpose-driven branding

Sustainable design is no longer a trendy, niche approach; it’s fast becoming a core principle. Consumers are looking for brands that align with their values and have a clear social or environmental mission, and brands that can communicate their purpose and demonstrate a real commitment to positive impact are likely to resonate. Customers are placing more value on products and services that prioritise sustainability, with studies finding that 81% of consumers believe brands should actively work to protect the environment.

From a design style perspective, we’re seeing a lot more thought go into packaging. It’s not just the classic recyclable materials and eco-friendly postage: packaging is being made reusable, or rethought so that it has a second life.

Sustainability-conscious styling is starting to shift away from clichéd ‘green’ towards a wider, nature-inspired palette of muted blues, beiges and pinks, used alongside the classic textured, earthy feel.

Amble Outdoors | Sustainable clothing

As well as these visual changes, the messaging is diving deeper. Audiences are better informed and increasingly concerned about climate change and other environmental issues, and they are looking for brands that prioritise sustainability. Campaigns are becoming bolder, calling out greenwashing and unsustainable practices and working as a force for change.

Make My Money Matter | Oblivia Coal Mine

The continued rise of AI

AI has made a huge impact on the marketing world over the last year. While the creative industry may feel under pressure from generative AI tools, it has also never felt so full of potential and scope for growth. AI is streamlining the design process, providing insights and generating multiple styles quickly. And AI is not going anywhere! It is only going to get bigger and better, especially as we humans learn to control and direct it.

In a recent survey by It’s Nice That, 83% of creatives in the industry are already using AI tools. There are still ethical concerns around bias, which means outputs need careful consideration by a human eye, and legal concerns around plagiarism, but the technology will undoubtedly continue to improve.

Midjourney outputs

Embracing nostalgia

Fashion repeats itself every twenty years or so, and this holds true in design too. The content of nostalgia marketing changes with the generations, as each generation has different cultural references. Gen Z may look to cultural references from the ’90s or early ’00s, whereas Gen X or older millennials might feel nostalgic about the ’80s and its cultural touchpoints.

We have seen a huge re-emergence of ’90s-inspired designs stemming from the iconic Barbie and Mean Girls movies, allowing many of us to embrace childhood nostalgia. This is paving the way for vibrant colours, abstract shapes, funky patterns and scrapbooking, to name a few. These design styles create an emotional bond with the audience, which aligns especially well with Gen Z’s growing digital fatigue and their desire for meaningful experiences and authenticity.

Cadbury | Yours for 200 years

Bold type

Sometimes text needs to do the talking. In a world where we are bombarded with visual information, and video and social media can be so intrusive, a simple message is effective. More campaigns are using simple type to carry the message and make an impact. Simplicity is key: where imagery is used, it is clean and enhanced by the typography.

B&Q | You can do it

Inclusive design

Inclusive design that is both diverse and accessible has rightly become a staple. It ranges from high-contrast graphics, clear typography and alt text for images to diverse and inclusive messaging.

Inclusive design enables us to talk to global audiences, breaking down barriers and challenging stereotypes.

Nike | This athlete isn’t just an athlete she’s a mother too.

Impactful communications that connect with people

It’s clear that the world of graphic design is always changing, and we’re seeing exciting trends shape the way we communicate.

But amidst all this change, one thing stays the same: our commitment to making impactful designs that connect with people. Whether it’s telling your brand’s story or spreading a message, we’re here to help. If you’re looking for cut-through with your creative communications, reach out to us. Let’s work together to create something that makes a lasting impression.

Navigating AI together: the risks of plagiarism and protecting IP

By Sally Pritchett

CEO

In a thought-provoking session on AI, plagiarism and IP, we unravelled the challenges facing communicators using generative AI.

In our most recent ‘Navigating AI together’ session, we went on a journey to explore the legal challenges around the use of generative AI tools. We were delighted to be joined by Alex Collinson from Herrington Carmichael, a seasoned expert in commercial and intellectual property law.

The purpose of the ‘Navigating AI together’ series is to foster a community dedicated to responsible AI usage and to work through these challenges with clarity and integrity, together. In this recap of our discussion, we’re shining a light on the issues around AI and charting a path towards ethical and informed AI usage.

What do we mean by intellectual property, copyright and trademarks?

Many of us are likely guilty of using language like intellectual property, copyright and trademarks almost interchangeably. But to understand the legal landscape around AI, we need to get these terms nailed down.

- Intellectual property (IP): refers to creations of the mind, such as inventions, literary and artistic works, designs, symbols, names, and images used in commerce. IP is protected by laws which give the creators or owners exclusive rights to use their creations or discoveries for a certain period of time.

- Copyright: Copyright grants exclusive rights to creators of original works, such as books, music and software, allowing them to control reproduction, distribution and other uses for a limited time. Copyright acts as a deterrent to those who may wish to profit from reproduction, and it can be licensed, creating a revenue stream for your business.

- Trademark: Trademarks protect words, symbols, or designs that distinguish goods or services, providing exclusive rights to their owners to prevent confusion among consumers and maintain brand identity.

Common copyright misconceptions

Even before we get into the new, complex and unclear realm of generative AI, Alex shared with us that there are a lot of common misconceptions:

- “Everything on the Internet is free to use”: Contrary to popular belief, just because something is freely available online doesn’t mean it’s in the public domain. Users must be cautious about where they obtain information and how they use it.

- “My name is protected by copyright”: Names and symbols can’t be protected by copyright; instead, they fall under trademark law. Trademarks prevent others from using similar names or symbols that could cause confusion in the marketplace.

- “You have to register your copyright to protect it”: Under English law, there’s no requirement to register copyright. Copyright protection automatically applies to works once they’re created, including artistic and literary works.

What the law says about generative AI

While the law is not quite up to speed with generative AI, Alex shared some interesting case examples to help us better understand the legal landscape.

Copyright

Alex shared with us a high-profile case, Getty Images (US) Inc v Stability AI Ltd [2023] EWHC 3090 (Ch), that highlights the difficulty generative AI is causing within copyright law. Getty Images alleged that Stability AI unlawfully scraped images from Getty’s websites to train its AI model, infringing Getty’s copyright and trademarks.

Stability AI sought summary judgment (a ruling without a full trial), but the court refused, recognising the novel issues surrounding AI and copyright law. These legal nuances reveal the need for the law to adapt to the evolving landscape of AI technology, and more cases are expected to emerge.

It is possible that copyright could be infringed when AI systems are trained, if this process involves copying a substantial part of copyright works that are still within their term of protection. However, whether copying will be deemed to have occurred when a generative AI system has been trained on copyright works is not straightforward.

Potential issues include:

- Proving the use of copyrighted works in training AI: proving that copyrighted works were used to train and develop a generative AI system poses challenges due to the lack of transparency in data usage.

- Jurisdictional issues: determining where training and development activities took place, especially in cases involving online communication, can be complex. However, even if the activities occurred outside the UK, UK copyright law may still apply where they targeted the UK public.

- Permitted acts under copyright law: assessing whether any infringement occurred involves considering the permitted acts set out in the Copyright, Designs and Patents Act 1988. These include fair dealing defences, such as criticism, review, quotation, parody, caricature and pastiche, which provide exceptions to copyright restrictions.

Trademarks

Given how new generative AI is and how quickly the legal landscape is evolving, there are very few case studies available. However, Alex explained how longer-established AI applications may help us to understand potential future legal implications.

AI-driven recommendation systems, commonly used in e-commerce, analyse user data to suggest products based on preferences and behaviour. Amazon, for instance, employs such AI technology.

In the case of Cosmetic Warriors and Lush v Amazon [2014] EWHC 181 (Ch), Amazon was found to have used the Lush name, in online advertising and on its own website, to attract customers and recommend other products, even though it did not sell Lush products and did not have Lush’s consent. Lush claimed trademark infringement, and the court ruled in its favour. This case highlights the importance of protecting trademarks within the digital marketplace.

The ethical dilemma

While the law hasn’t yet adapted to the emergence of generative AI, as communicators we can’t afford to sit back and wait when tools that can enhance creativity and productivity are already available to us. With legal uncertainty ongoing, it’s up to us to evaluate the ethical implications of our communications practices.

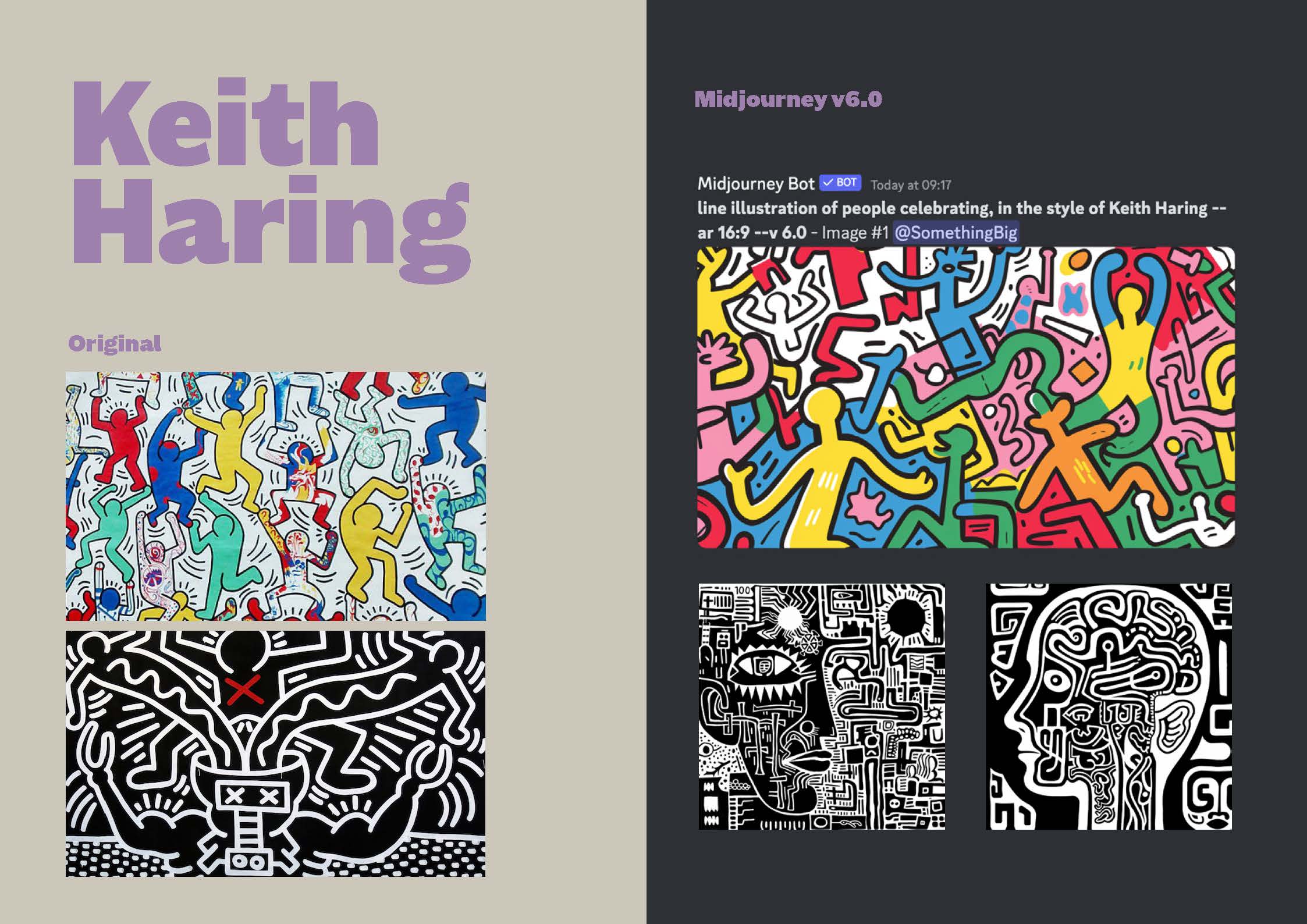

Consider a scenario shared during our session, involving Keith Haring’s artwork and an AI-generated copy produced on Midjourney, a licensed platform. Keith Haring is perhaps most famous for his art used in the iconic Change4Life public health campaign.

The Keith Haring Foundation, a charitable organisation dedicated to children in need and those affected by HIV/AIDS, holds the rights to Haring’s iconic artwork.

However, we posed a hypothetical situation where the government wants to commission a new public health campaign, once again using Haring’s renowned illustrative style. As our Midjourney example shows, instead of licensing art from the Keith Haring Foundation, they could potentially use AI-generated images.

This raises ethical and legal concerns about intellectual property rights. Although AI can mimic Haring’s style, using these images without consent from the Keith Haring Foundation could potentially infringe its IP rights. There is also the ethical impact of not licensing the images from a charitable foundation.

This example reflects the importance of ethics when incorporating AI-generated content into communication strategies, while we wait for the law to catch up.

We’d like to thank Alex for guiding us through this complex topic. If you’d like to explore the legal implications of generative AI further, we encourage you to connect with Alex on LinkedIn to stay updated on future discussions and insights.

Navigating AI together

Our upcoming session will delve into the role AI should play in marketing efforts and consider how marketers can best leverage AI to streamline tasks and free up valuable time.

If you’d like to be kept in the loop for details on this insightful discussion, get in touch.

4 steps to making generative AI an ally to inclusion

By Sally Pritchett

CEO

Discover four practical steps to mitigate bias in generative AI and ensure inclusive, authentic representation in your AI-powered communications.

AI offers amazing opportunities for communicators, from writing creative content to bringing to life a visual idea in a matter of seconds. But its power comes with a challenge: inherent biases.

Generative AI has been trained on human-created content, and so it has inherited deep-seated biases. These biases can, and often do, unintentionally permeate AI-generated content, reinforcing stereotypes and misconceptions.

So as the human hands guiding AI, what can we do to help overcome these biases and use AI as a tool to foster inclusion and authentic representation?

1. Nurturing AI as responsible guides

It’s important that we hold the hand of AI and guide it safely, as generative AI learns from the language we use. We need to understand the equality, diversity and inclusion (EDI) landscape thoroughly ourselves before we can expect AI to generate outputs that are genuinely inclusive and authentically representative.

2. Navigating our human bias

The second step to making AI an ally to inclusive communications is self-reflection. We’re human, and we’re fallible, and it is important to remember that in the context of EDI. As humans, we do form stereotypes – it’s a coping mechanism and our brain’s attempt to simplify the barrage of information we encounter daily.

We must remain vigilant – consciously slowing down and actively recognising these stereotypes within ourselves so we do not bring them into our communications with AI.

3. Increasing awareness of our unconscious biases

Unconscious bias refers to the automatic attitudes and stereotypes that influence our judgments and actions without our conscious awareness. Shaped by our experiences and societal influences, these biases impact how we view others.

If you’re considering using AI within your communications, then you must understand your own unconscious biases. The Harvard IATs (Implicit Association Tests) are a useful tool to help you begin to do this. Set up by a collaboration of US researchers in 1998, Project Implicit aims to collect data on our biases as we learn about ourselves. We’d recommend picking one identity characteristic you think you don’t carry bias on and one you think you do, and seeing how it plays out. These tests can help you identify where your unconscious biases could influence AI.

4. Learning from our mistakes

AI is still a relatively new tool for many of us, and we are still learning how to get the best out of ChatGPT or how to write an effective prompt in Midjourney. We are naturally going to make mistakes as we learn to use different AI platforms. But we must learn from them, identifying where we need to reword a prompt or change the language we are using to generate more inclusive results. By taking the time to craft prompts carefully to guide unbiased outcomes, we can minimise our mistakes and foster greater inclusion.

But what if AI makes a mistake and leans on bias or stereotypes? We can help it learn from its mistakes too! By offering corrective feedback, we can help steer AI responses towards being more inclusive.

Navigating AI together

Our ‘Navigating AI together’ workshop series has been providing a safe and open space for communicators to discuss various aspects of AI.

This time, recognising the pressing need, we’re focusing on intellectual property and copyright issues. It’s an area many communicators have been grappling with, so in our next session, on Friday 15th March, we’re going to delve into it together.

We are delighted to be welcoming Alex Collinson, from Herrington Carmichael, who specialises in commercial and intellectual property law matters. Alex will lead an insightful discussion covering copyright, brand protection, confidentiality concerns, and real-world cases of AI IP infringement.

Navigating AI Together: Making AI an ally to inclusive communications

By Sally Pritchett

CEO

How can we tackle AI bias for more inclusive and authentic representation?

At our recent ‘Navigating AI Together’ roundtable, we delved into the critical issue of bias within AI, and how we can overcome this in-built bias and use AI as a tool to foster inclusion and authentic representation.

We were delighted to welcome Ali Fisher, a seasoned expert in fostering sustainable, diverse, equitable, and purpose-driven business practices. With a background including leadership at Unilever and the Dove Self-Esteem Project, Ali brought a wealth of knowledge and experience in the realm of DE&I. Her invaluable insights provided fresh perspectives on navigating AI’s impact on communications.

Unravelling bias in AI

Generative AI offers amazing opportunities for communicators, but its power comes with a challenge: inherent biases. Generative AI has been trained on human-created content, and so it has inherited deep-seated biases. These biases can, and often do, unintentionally permeate AI-generated content, reinforcing stereotypes and misconceptions.

It’s been well documented and discussed over the last year that generative AI takes bias and stereotyping from bad to worse – with Bloomberg publishing headlines like ‘Humans are biased, generative AI is even worse’. This bias is of course very worrying when we’re also seeing reports that 73% of users globally already say they trust content created by generative AI.

But let’s go back a step. While generative AI may be biased due to the training data that feeds it, what about the conditions under which the AI tools themselves are developed?

The lack of diversity within the tech industry adds complexity. The gender disparity is evident, with only 22% of the UK tech sector and 21% of US computer science degree earners being women. One study showed that code written by women was approved the first time round more often than men’s, but only if the gender of the coder was hidden. If the gender was revealed, the data showed that women coders received 35% more rejections of their code than men.

Race and ethnicity disparities in tech are also concerning. According to a report from the McKinsey Institute for Black Economic Mobility, Black people make up 12% of the US workforce but only 8% of employees in tech jobs. That percentage is even smaller further up the corporate ladder, with just 3% of tech executives in the C-suite being Black. The gap is expected to widen over the next decade.

Nurturing AI as responsible guides

During our ‘Navigating AI together’ roundtable, an analogy was shared: AI is like a toddler trying to cross a busy road. Just as we wouldn’t allow a toddler to wander into traffic alone, we must hold the hand of AI and guide it safely.

We need to understand the EDI landscape thoroughly first, becoming adept guides before we can expect AI to generate outputs that are genuinely inclusive and authentically representative. As humans, we need to be responsible AI users, always giving a guiding hand. The first step to making AI an ally to inclusive communications is self-reflection.

Navigating our human bias

We’re human, and we’re fallible, and it is important to remember that in the context of EDI.

In one study, researchers observed 9-month-old babies, evenly divided between Black and white infants. They were all equally exposed to both Black and white adults, all unknown to them. The white babies consistently gravitated toward the white adults, while the Black infants showed a preference for the Black adults. This inclination toward familiarity emerged as early as nine months, suggesting an inherent comfort with those we perceive as similar.

As humans, we tend to categorise. We use schemas and, yes, stereotypes too. It’s a coping mechanism and our brain’s attempt to simplify the barrage of information we encounter daily. Yet this simplification calls for heightened awareness. We need to consciously slow down, stay vigilant and actively recognise these tendencies within ourselves.

Increasing awareness of our unconscious biases

Unconscious bias refers to the automatic attitudes and stereotypes that influence our judgments and actions without our conscious awareness. Shaped by our experiences and societal influences, these biases impact how we view others.

If you’re considering using AI within your communications, then you must understand your own unconscious biases. The Harvard IATs (Implicit Association Tests) are a useful tool to help you begin to do this. Set up by a collaboration of US researchers in 1998, Project Implicit aims to collect data on our biases as we learn about ourselves. We’d recommend picking one identity characteristic you think you don’t carry bias on and one you think you do, and seeing how it plays out.

Exploring bias in generative AI

Having looked at why generative AI contains bias and how our own biases influence our perceptions, let’s shift our focus to the actual AI outputs. You have likely already encountered biased outputs from AI, and in our session we made several comparisons between Google image search results and the outputs of the generative AI tools ChatGPT and Midjourney.

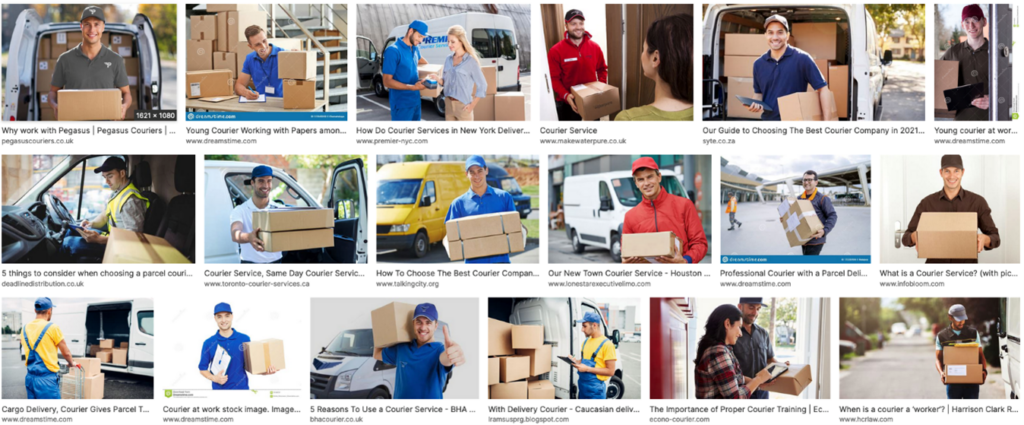

Let’s start with a familiar scenario: the image of a courier. When you think of a courier – the person who delivers your Amazon packages – what’s the immediate mental picture that springs to mind?

A quick Google image search shows a courier as a man carrying a box, often with a van. This representation is the outcome of the content humans have uploaded; these images were created by people, not generated by AI.

Now, let’s compare it to what AI, drawing from its training data, perceives as a courier’s life.

When we prompted ChatGPT to describe a day in the life of a courier, it conjured a narrative around a character named Jake.

Similarly, looking at Midjourney’s output, we have images suggesting men with boxes and motorbikes as representations of couriers.

Over the course of the roundtable, we shared and discussed many examples of AI bias. For a deeper understanding, we recommend watching Amy Webb’s presentation at the Nordic Business Forum, in which she revealed how AI mirrors human biases. From CEOs to tampons, AI struggled.

It’s safe to say that AI does not challenge perceptions of who a person could be. It often reflects society’s most ingrained stereotypes back at us and fails to reflect the full range of EDI characteristics that humans have.

AI and authentic representation

There are only four EDI identity characteristics that we see or perceive easily – tone of voice, mannerisms, attire, and skin colour. Everything else requires more information from the individual. We can’t accurately assume someone’s age, gender, sexual orientation, race or ethnicity. We can’t assume whether someone has a disability or not.

So how does AI fare when it comes to navigating these visible and invisible EDI characteristics?

If you ask Midjourney to show you construction workers, you’ll likely get something like this, with a clear lack of visible diversity among the four images.

We then asked Midjourney to depict construction workers with a disability. The generated images were all very similar, with three of the four depicting the construction worker as a wheelchair user.

Next, we asked Midjourney to depict LGBTQIA+ construction workers. This output really shows AI’s propensity to stereotype.

When it comes to minority groups, AI seems to at best lean on lazy stereotypes, and at worst create an offensive parody of reality. These results show how important it is for us to be hyper-aware of EDI within our communications when using AI, and to hold the hand of that toddler crossing the road tightly!

How to make AI an ally to inclusive communications

As the human communicators guiding the hand of AI, reducing our personal bias has to be the first step:

- Engage in the Harvard IATs to heighten awareness of your unconscious biases

- Be prepared to get it wrong and learn from your mistakes

- Evaluate the diversity in your social and professional circle

- Challenge yourself to culture-add, not culture-fit

- Practise active listening, valuing others’ perspectives over your own voice

With that foundation in place, our top tips for reducing AI bias are:

- Craft prompts carefully to guide unbiased outcomes

- Offer contextual details to help AI better understand your expectations and requirements

- Fact check for genuine and authentic representation in all AI-generated content

- Offer corrective feedback to steer AI responses towards inclusivity

- Develop ethical guidelines for all AI users and provide thorough training

Reach out to us or Ali for a deeper conversation on how you can cultivate a culture that embraces and understands the value of DEIB within your organisation.

To join our upcoming ‘Navigating AI Together’ session in 2024, please send an email over to hello@somethingbig.co.uk to stay in the loop.

Five AI trends we expect to see in 2024

By Sally Pritchett

CEO

Looking ahead to 2024, what AI trends can we anticipate?

This year, we have witnessed the rapid growth of AI. New platforms, applications, and software have emerged for almost every industry, including creative communications. However, in the ever-evolving landscape of AI, what trends can we expect to see in 2024?

Increased legislation

Nations across the globe are forming comprehensive AI policies in order to set regulations, drive innovative growth, and help ensure everyone can benefit from AI. As AI continues to advance, expect to see further guidelines and regulations introduced, including laws to prevent harmful content such as deepfakes. The speed at which AI evolves is set to continue in 2024, so we expect legislation will continually evolve to keep up with this new technology.

The importance of ethical AI

As AI becomes more widely adopted in 2024, we will see a continued focus on it being developed and used in a responsible way. There are already concerns about AI biases, plagiarism, accuracy, and a lack of transparency. However, despite these concerns, 73% of users trust content created by generative AI. This worrying statistic highlights how important it is that AI is used ethically. As we head into 2024, experts expect to see an increased interest in AI ethics education.

In the communications industry, honesty, accuracy and inclusivity are vital, so it’s important that we use AI in a responsible way. We believe it’s time for the communications industry to take the lead and set its own guidelines for ethical AI usage.

AI-enhanced creativity

AI is rapidly becoming a collaborative partner to people in many different job roles across a vast range of industries, including creative communications. Platforms such as Midjourney and Adobe Firefly have brought AI capabilities to the creative industry with tools such as generative fill. Whilst there is no replacement for human creativity, these tools can help creatives work more efficiently. As we move through 2024, expect to see these tools more widely adopted and new capabilities introduced.

The next generation of generative AI

Generative AI has advanced rapidly this past year, and the pace is set to continue. In 2024, generative AI is expected to advance further, from language model-based chatbots such as ChatGPT to video creation tools. Experts predict that AI applications and tools will become more powerful and user-friendly, new applications and capabilities will appear, and the difference between human and AI-generated content will become trickier to determine. Furthermore, we will start to see AI integrated into commonly used applications, such as the introduction of Microsoft 365 Copilot across the Microsoft 365 product suite.

AI as an intelligent assistant

As AI continues to be integrated into more commonly used applications and software, it is expected that it will start to become an intelligent assistant to us in our everyday work. From summarising lengthy PDF documents to grammar checking, and highlighting social media trends to researching hot topics, AI can help us work more efficiently. However, while AI offers opportunities to streamline processes and increase efficiency, we must still ensure responsible and ethical use.

Navigating AI together: overcoming bias and achieving inclusion

With the AI revolution upon us, we’ve been facilitating regular roundtable discussions to support communicators in navigating this change together.

In our next session we will examine the issue of the biases embedded in AI and explore how we can mitigate this bias and harness AI tools to foster greater inclusion and authentic representation.

Let’s continue Navigating AI Together, ensuring responsible, ethical, and inclusive AI usage in our communication practices.

Navigating AI Together: Accessibility, transparency and voice cloning

By Sally Pritchett

CEO

We're uniting communicators to navigate the ever-evolving AI landscape. Here, we share insights from our most recent 'Navigating AI Together' roundtable.

AI can be a scary topic. For many, the opportunity for a big productivity boost sits alongside fears of role replacement and the devaluing of skills. When we heard these concerns from fellow communicators, we knew we needed to help. Our ‘Navigating AI Together’ forum is supporting communicators to manage the transition to AI together, safely, ethically, and with positive and open mindsets.

In our most recent session of Navigating AI Together, we delved into the current state of AI, strategies for enhancing communication accessibility and inclusivity, the importance of AI transparency, and even took a deep dive into the fascinating realm of AI voice cloning.

In previous sessions we have:

- Explored the role of AI in communications, including the challenges and opportunities.

- Shared a guide to prompting, tailored to communication professionals.

- Discussed the creation of policies for responsible AI usage and user accountability.

- Provided a review of Midjourney.

- Examined AI-assisted video creation.

The current state of AI

In the latest ‘Navigating AI together’ session we reflected on the rapid growth of AI, especially with the recent emergence of generative AI, symbolised by ChatGPT’s meteoric rise. With the genie now out of the bottle, the AI landscape is evolving rapidly, leading to an influx of uses, applications and platforms. However, experts are suggesting that we may not experience another technological leap of this magnitude anytime soon. Our collective responsibility now is to maximise AI’s potential while adhering to clear usage guidelines and responsible practices.

Notably, one concerning statistic reveals that 73% of users trust content produced by generative AI. This underlines the importance of closely supervising AI-generated content, especially in communications, where reliability and truthfulness are paramount.

Legislation and regulation may be in development, but progress is likely to be slow and may not fully address the intricacies of AI usage. We believe it’s time for the communications industry to take the lead in setting its own guidelines for AI usage.

Despite all the disruption, there is a positive note. AI is already making a substantial positive impact in various domains, from improving cancer screening to addressing climate change, conserving wildlife, and fighting world hunger. The potential for AI to do good is vast and exciting.

How AI can enhance communication accessibility

Our role as communication professionals includes making our communication as effective as possible and ensuring it is accessible to everyone. Currently, fewer than 4% of the world’s top million websites are accessible to people with disabilities. AI can help accelerate progress in this regard by simplifying and enhancing communication:

- Copy simplification with AI editors – Tools like the Hemingway Editor can simplify copy and improve readability, making communication more accessible.

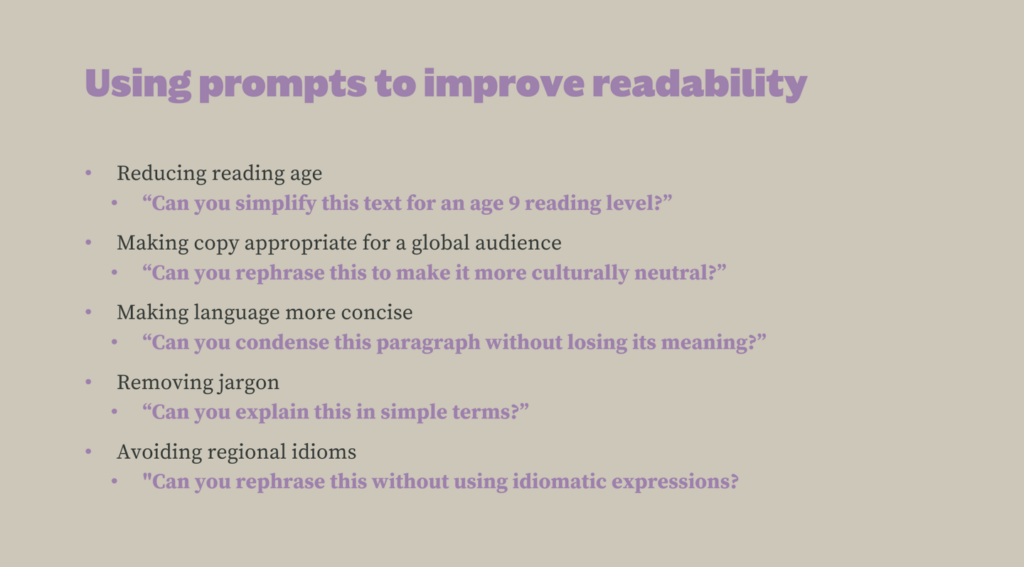

- AI prompts for readability – Using AI prompts to tailor text for different reading levels, cultures, and languages.

- Digital sign language – New platforms in development, such as Signapse, will be able to generate sign language from text or audio, making communication accessible to people who use sign language.

- Translation – AI can improve and speed up translation, producing accurate, natural-sounding results and learning from feedback over time.

- Image descriptors – Tools like Verbit can create audio descriptions of images in real time.

- Text-to-speech and speech-to-text – Converting between written and spoken formats enhances accessibility across different communication modes.

- Multilingual chatbots – Support conversations with audiences in multiple languages.

The importance of AI transparency

During the forum we addressed the significant issue of transparency in AI. We posed thought-provoking questions to the group about transparency and shared considerations for when, how, and whether to be transparent in our AI usage. Our conversations showed that transparency in AI isn’t a straightforward, black-and-white matter; rather, it exists on a spectrum with shades of grey. Determining when to be transparent about our use of AI can pose significant challenges.

Regulation has already driven the need for transparency in various digital domains, such as cookies on websites and advertisements on social media. Just as privacy regulations have mandated the disclosure of cookie usage, AI could follow a similar path. Users may come to expect clear information about when AI is involved in content generation, and it may be time for communicators to rise to this challenge without waiting for legislation.

AI voice cloning

Voice cloning tools use AI and deep learning algorithms to replicate and mimic human voices. These tools analyse the vocal characteristics, pitch, tone, and nuances of a person’s voice from a sample of audio recordings. Once trained, they can generate speech that closely resembles the original speaker’s voice, allowing for the creation of natural-sounding, synthetic voices.

AI voice cloning has numerous applications, from creating realistic voice assistants to generating voiceovers for content. However, it also raises ethical concerns about potential misuse and impersonation, highlighting the need for responsible use and regulation in this rapidly advancing field.

We investigated several voice cloning tools and shared our findings with attendees. If you’d like to find out more, please get in touch.

Navigating AI together: overcoming bias and achieving inclusion

In our next session we will examine the issue of the biases embedded in AI and explore how we can mitigate this bias and harness AI tools to foster greater inclusion and authentic representation.

Let’s continue Navigating AI Together, ensuring responsible, ethical, and inclusive AI usage in our communication practices.

Navigating AI together: AI policy insights, exploring AI-assisted video and Midjourney platform review

By Sally Pritchett

CEO

We are bringing communicators together to navigate the ever-changing landscape of AI. Here, we share essential insights from our second 'Navigating AI Together' roundtable.

Some view AI as a tool for enhancing productivity, while others are understandably expressing concerns about its impact on jobs and the value of creative and communication skills. Recognising these concerns among our network of communication professionals, we’ve established a safe space for collaborative learning about AI and its ethical, responsible usage. Our commitment is to empower our community to embrace AI positively and with an open mind as we collectively explore its potential.

In our second ‘Navigating AI Together’ roundtable, held in September, we delved into AI policies and governance and AI-assisted video creation, and shared our review of the generative AI tool Midjourney. One significant takeaway from the discussion was that while AI may boost productivity, it isn’t necessarily reducing workloads; it simply reshapes them.

For insights from our first roundtable, check out the write-up here, where we explored how communications influences workplace culture, employee experience, productivity, sales, customer service, and innovation.

AI insights: policies and governance

We were pleased to be joined by marketing expert, Danny Philamond, from fellow B Corp business, Magnus Consulting. Danny has been advising clients on AI usage, and during our discussion, he shed light on the importance of creating AI policies and governance.

Potential risks of AI:

When considering risks, we focused on marketing and communications:

- Sharing confidential data with third parties poses a significant risk. For example, OpenAI (the creators of ChatGPT) states in its terms of service that it may share data with its vendors (potentially up to 80 plugins at the time of writing). Anything you share with an AI tool has a potentially massive reach. However, in ChatGPT, for example, you can limit this by turning off chat history and training, reducing the amount of data you share.

- When using an AI tool, think about whether you would want the information to be made public.

- Even if information isn’t strictly confidential, it might still be privileged. Consider whether you’d want AI to learn your product strategies, and potentially share that insight with competitors.

- If you’re using your knowledge and experience to refine AI outputs, and then feeding those back into the model, you’re enabling your unique insights to train the AI. Is that something you want to freely share?

Guidance for advising teams and suppliers:

Emphasise responsible usage:

- Individual accountability is crucial, as policies can’t cover every AI use, given the rapid evolution of the field.

- Maintain transparency, indicating when content is AI-generated.

- Integrate AI usage, accountability, and transparency into your due diligence processes.

Brands and transparency:

- Nearly three-quarters of consumers believe that brands should disclose the use of AI-generated content, according to a recent IPA study. Currently, it’s up to brands to decide on transparency.

- Some AI companies, including OpenAI, Alphabet, and Meta, have made voluntary commitments to watermark AI-generated content, which could eventually become a regulatory requirement.

- Consider the impact of transparency: imagine if Photoshop edits or Instagram filters had been required to disclose image alterations when they first became popular. Such transparency might have helped ease the growing challenges we’re seeing around self-esteem and mental health. We have the opportunity, and perhaps the moral responsibility, to be transparent about AI-generated content.

Break down company-wide AI policies:

- Build flexibility into policies, as the AI landscape evolves rapidly. Plan regular policy reviews.

- Provide training on the guidelines, ensuring everyone understands AI terminology and limitations.

- Support teams in utilising AI tools for efficiency while managing associated risks.

Recommendations for AI policies and guidelines:

As a starting point, all policies should consider:

- User accountability and responsible usage.

- Best practices for effective AI usage.

- Safe AI usage.

- Activity to avoid and risk factors.

- Data handling and protection.

- Compliance and governance.

- Training and feedback loops.

AI use case: Exploring AI-assisted video creation

During the previous session, we found that the sheer number of AI tools and opportunities was overwhelming to many communicators, so in this session we focused on just one potential use case: AI-assisted video creation. We shared an example of an AI-assisted video that we could produce for clients, in which an employee policy handbook was translated into a digestible animation (please get in touch if you’d be interested in watching this video and finding out more about the process involved). These videos can be quick to create, can be considerably more affordable than traditional video, can be translated into multiple languages, and can bring otherwise inaccessible documents to life.

After viewing the video, our group identified that AI-assisted video creation can be a solution for communication projects that often receive limited attention and budget, such as:

External communications:

- Product descriptions

- Platform demos

- Customs advice

- Product or service specifications

- Regulatory advice

Internal communications:

- Health and safety materials

- Employee handbooks

- Systems training

- Onboarding documents

- Policy materials

AI platform review: Midjourney

Midjourney is a generative AI program and service that produces images from natural language descriptions (called prompts). After you submit a prompt, Midjourney generates four different images, which you can then edit, or you can tailor your prompt to refine the concepts. Midjourney can create any style of image you can think of. In our Midjourney platform review, we cover how to use it, subscriptions and licensing, prompt writing, and the pros and cons of using the platform from a communications perspective. You can download the full platform review here.

Navigating AI together: overcoming bias and achieving inclusion

In our next session we will examine the issue of the biases embedded in AI and explore how we can mitigate this bias and harness AI tools to foster greater inclusion and authentic representation.

The art of AI: Midjourney guide and review

By Sally Pritchett

CEO

Download our free Midjourney guide and review.

Are you considering if Midjourney could be the right platform for you? Our creative team have put the AI tool to the test. Within this free guide we cover:

- How to use Midjourney

- Subscriptions and licensing

- Prompt writing

- The pros and cons from a communications perspective

What is Midjourney?

Midjourney is a generative artificial intelligence program and service. It generates images from natural language descriptions, called “prompts”. After you submit a prompt, Midjourney will generate four different images to choose from, at which point you can edit them or tailor your prompt to refine your concept. From illustration to hyper-realism, Picasso to pixel art, Midjourney can create any style of image you can think of and a whole world you haven’t.

Is Midjourney for commercial use?

All Midjourney subscriptions and plans come with general commercial terms, meaning you can use outputs as you wish, but they’re not exclusively yours. Images can be used and modified by other users within the Discord community; essentially, everything you create with Midjourney can be used by Midjourney and by anyone else. You can’t copyright the images either.

Want to find out about how Midjourney could impact your communications? Get in touch.

Download your free guide to Midjourney

AI prompt guide for communications professionals

By Sally Pritchett

CEO

Download our AI prompt guide for communications professionals and start leveraging the power of AI.

AI is a tool, not a replacement. Our AI prompt guide for communications professionals empowers you to wield AI effectively in your content creation journey. It’s about enhancing, not replacing, your unique perspective and creativity. Harnessing the capabilities of AI has become a necessity for staying ahead, as the rise of AI tools like ChatGPT has revolutionised the way we approach research and content creation.

Getting started with AI

Our AI prompt guide is designed to be your go-to companion on your AI journey. Whether you’re new to AI or looking to refine your prompting skills, this guide is your roadmap to effective content creation. It offers easy-to-follow instructions on creating prompts that yield useful results, ensuring your interactions with AI are both productive and efficient.

Train ChatGPT to write in your brand voice

Discover how to craft prompts that align with your brand’s unique writing style and communication goals.

Download Your AI Prompt Guide

Ready to harness the power of AI to elevate your content creation? Don’t miss out on the AI Prompt Guide for Communicators. Download your copy now.

Stay ahead, stay creative, and let AI amplify your impact.

Download your free prompt guide here

Navigating AI together: uniting communicators to embrace change

By Sally Pritchett

CEO

Change can be unsettling, especially when we’re not prepared for it.

With the AI revolution upon us, we are bringing together communicators to navigate this change together. Here are some key insights from our first roundtable.

AI can be an emotional topic, and for many, the opportunity for a big productivity boost sits alongside fears around role replacement and the devaluing of skills. Hearing concerns from fellow communicators, we have created a forum ‘Navigating AI together’ to enable us to manage the transition to AI together, safely, ethically, and with positive and open mindsets.

In our first roundtable, we discussed how communications is a driving force that shapes workplace culture, employee experience, productivity, sales, customer service, and innovation. We know it’s not time to hand over communications to robots, but rather to focus on leveraging the potential of AI alongside human expertise. Here is a summary of some of the key conversations from the first ‘Navigating AI together’ roundtable.

AI and communications: setting the scene

At the recent Simply IC conference, established internal communicators explored the impact of AI in their field. During the discussions, they found that ‘AI is generally welcomed by the communications community although still quite scary’.

In a reassuring address at the Simply IC conference, generative AI expert and author Nina Schick emphasised that AI makes communication more important than ever. Instead of replacing the role of communicators, it is likely to highlight the need for real human communication.

In the roundtable, we also discussed how uptake of AI might be slower than the media would suggest. A survey of 2,000 people in the US shed light on the current state of mainstream AI usage. The results indicated that AI adoption remains relatively limited, with only one in three people having tried AI-powered tools. Moreover, most people are not familiar with the companies behind these innovations. Younger generations, particularly millennials, lead in embracing AI, while boomers appear to lag behind.

Opportunities and challenges in AI for communicators

Communicators are often juggling large workloads, always trying to do more with tighter budgets and tighter deadlines. If your CEO came to you today and said, ‘I’m giving you budget for an extra head in your team, whose role is just to support you’, you probably wouldn’t say no. AI has the potential to be a very useful tool in the box.

During our first ‘Navigating AI together’ roundtable, communicators identified several opportunities surrounding AI in communications:

- Simplifying contracts and legal language, making complex concepts understandable.

- Distilling white papers and reports for easier comprehension with tools like ChatPDF.

- Supporting pre-meeting research, providing insights for better preparation.

- Quickly generating thought-starters and creative prompts with AI tools.

- AI-powered voice narration making video content a more accessible and affordable channel (while considering concerns around cloning voices and deep fakes).

- Ensuring content from multiple sources has a consistent tone of voice.

- Boosting the accuracy, speed, and accessibility of data analytics.

- Accelerating content creation, with AI acting like an intern, while keeping accuracy a priority.

- Supporting annual and quarterly reporting, especially in understanding legislation, regulations, and sustainability challenges.

However, with great power comes great responsibility. The communicators attending the roundtable were cautious of the risks around AI, including:

- The potential for content plagiarism.

- Voice and video cloning leading to the threat of deep fakes.

- Rapid advancements impacting job roles.

- The need to double-check all AI-generated content for consistency and accuracy.

- Over-reliance on AI, leading to dependence issues and deskilling.

- The limits of AI’s intelligence, as it can only draw on borrowed information.

- Ambiguities in policies, guidelines, and law.

- The ethical considerations surrounding original IP, ideas, and creative work.

Navigating AI together: overcoming bias and achieving inclusion

In our next session we will examine the issue of the biases embedded in AI and explore how we can mitigate this bias and harness AI tools to foster greater inclusion and authentic representation.